Teaching AI to manipulate objects using visual demos

- by 7wData

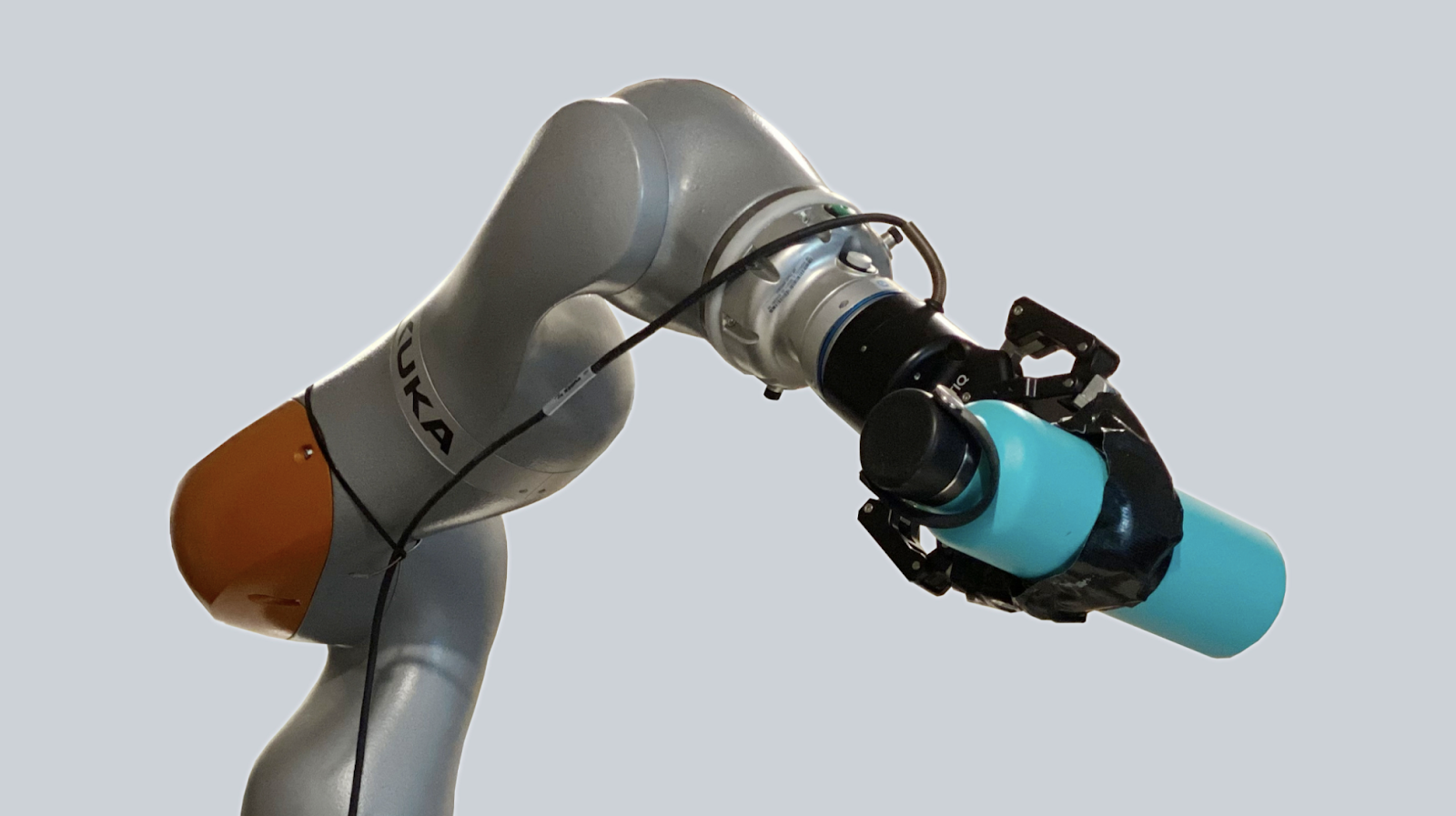

People are highly efficient at learning simple, everyday tasks: we can learn how to pick up a bottle or place it on a table, for instance, just by watching a person demonstrate the task a few times. But to learn how to manipulate such objects, machines typically need hand-programmed rewards for each task. To teach a robot to place a bottle, we’d first have to tailor its reward so it learns to move the bottle upright over the table. Then we’d have to give it a separate reward focused on teaching it to put the bottle down. This slow, tedious, iterative process is not conducive to real-world use, and ultimately we want to create AI systems that can learn in the real world as efficiently as people can.

As a step toward this goal, we’ve created (and open-sourced) a new technique that teaches robots to learn in this manner, from just a few visual demonstrations. Rather than relying on pure trial and error, we trained a robot to learn a model of its environment, observe human behavior, and then infer an appropriate reward function. This is the first work to apply this method, model-based inverse reinforcement learning (IRL), to visual demonstrations on a physical robot. Most prior research using IRL has been done in simulation, where the robot already knows its surroundings and understands how its actions will change its environment. Learning and adapting amid the complexity and noise of the physical world is a much harder challenge for AI, and this capability is an important step toward our goal of building smarter, more flexible AI systems.

This achievement centers on a novel visual dynamics model, trained with a mix of learning-from-demonstration and self-supervision techniques. We also introduce a gradient-based IRL algorithm that optimizes cost functions by minimizing the distance between the execution of a policy and the visual demonstrations.
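The article does not describe the architecture of its visual dynamics model. As a rough illustration of the self-supervised part only, the sketch below assumes the robot's camera observations have already been reduced to a small state vector (for example, tracked keypoint coordinates) and fits a hypothetical linear model s' ≈ A s + B a by least squares to transitions the robot logs while acting on its own. The function names `fit_linear_dynamics` and `predict`, and the linear form itself, are our assumptions, not the authors' model.

```python
import numpy as np


def fit_linear_dynamics(states, actions, next_states):
    """Least-squares fit of a hypothetical linear model s' ~= A s + B a.

    states: (N, ds), actions: (N, da), next_states: (N, ds).
    Returns A with shape (ds, ds) and B with shape (ds, da).
    """
    ds = states.shape[1]
    X = np.hstack([states, actions])  # regressors: (N, ds + da)
    # Solve X @ W ~= next_states for W of shape (ds + da, ds).
    W, *_ = np.linalg.lstsq(X, next_states, rcond=None)
    A = W[:ds].T
    B = W[ds:].T
    return A, B


def predict(A, B, s, a):
    """One-step prediction with the fitted model."""
    return A @ s + B @ a


# Self-supervised data: the robot applies random actions and records the
# resulting states; no human labels are needed. A_true/B_true are toy values.
rng = np.random.default_rng(0)
A_true = np.array([[1.0, 0.1], [0.0, 1.0]])
B_true = np.array([[0.0], [0.1]])
S = rng.normal(size=(200, 2))
U = rng.normal(size=(200, 1))
S_next = S @ A_true.T + U @ B_true.T

A_hat, B_hat = fit_linear_dynamics(S, U, S_next)
```

A real visual dynamics model would be nonlinear and trained on image-derived features; the linear fit only shows how logged (state, action, next state) triples supply their own supervision.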

The objective of IRL is to learn reward functions such that the result of the policy optimization step closely matches the visual demonstrations. To achieve this in a sample-efficient manner, model-based IRL uses a model both to simulate how the policy will change the environment and to optimize the policy.
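One way to write this objective as a bilevel problem follows; the notation is ours, not the article's. An inner problem chooses actions that minimize a cost c_θ (a negative reward with parameters θ) through the model f, and an outer problem adjusts θ so that the states simulated under those actions match the demonstrated states s^demo_t. The squared Euclidean distance is an assumed choice of distance.

$$
\begin{aligned}
\theta^\star &= \arg\min_{\theta} \sum_{t=1}^{T} \bigl\lVert \hat{s}_t(\theta) - s^{\mathrm{demo}}_t \bigr\rVert^2,\\
a^\star_{0:T-1}(\theta) &= \arg\min_{a_{0:T-1}} \sum_{t=0}^{T-1} c_\theta(s_{t+1}, a_t)
\quad \text{s.t.} \quad s_{t+1} = f(s_t, a_t),\\
\hat{s}_{t+1}(\theta) &= f\bigl(\hat{s}_t(\theta), a^\star_t(\theta)\bigr), \qquad \hat{s}_0 = s_0 .
\end{aligned}
$$

Because each predicted state depends on θ only through the optimized actions a*(θ) and the model f, any gradient of the outer objective with respect to θ has to pass through both the model and the inner optimization.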

One of the biggest challenges in IRL is finding an objective that can be used to optimize the reward function. The effect of changing the reward signal can only be measured indirectly: first, a new policy has to be learned, and then that policy has to be simulated to predict the visual changes in the environment. Only after the second step can we compare the predicted visual changes with the visual demonstration.
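The two-step dependency can be made concrete with a toy example. Everything below is a hypothetical stand-in chosen for illustration: the "learned" model is s_{t+1} = s_t + a_t, the cost is ||s − θ||² + λ||a||² (so θ acts as a goal the cost pulls toward), the "policy" is an open-loop action sequence found by gradient descent, and the outer gradient is estimated by central finite differences. That last choice is a simplification; a practical gradient-based method would more likely differentiate through the inner optimization. Each finite-difference probe re-runs both steps: learn a policy for the perturbed cost, then simulate it and compare with the demonstration.

```python
import numpy as np


def rollout(s0, actions):
    """Hypothetical model s_{t+1} = s_t + a_t; returns states s_1..s_T."""
    return s0 + np.cumsum(actions, axis=0)


def inner_policy_opt(s0, theta, horizon=5, lam=0.1, lr=0.02, steps=300):
    """Step 1: gradient descent on actions under cost ||s - theta||^2 + lam*||a||^2."""
    actions = np.zeros((horizon, s0.shape[0]))
    for _ in range(steps):
        err = 2.0 * (rollout(s0, actions) - theta)  # dJ/ds_t for each t
        # Action a_k moves every later state, so sum state errors from k onward.
        grad = np.cumsum(err[::-1], axis=0)[::-1] + 2.0 * lam * actions
        actions -= lr * grad
    return actions


def outer_loss(theta, s0, demo):
    """Step 2: simulate the newly learned policy and compare with the demo."""
    predicted = rollout(s0, inner_policy_opt(s0, theta))
    return np.sum((predicted - demo) ** 2)


def irl(s0, demo, theta0, lr=0.05, iters=30, eps=1e-4):
    """Outer loop: update cost parameters using finite-difference gradients."""
    theta = theta0.astype(float)
    for _ in range(iters):
        grad = np.zeros_like(theta)
        for i in range(theta.size):
            d = np.zeros_like(theta)
            d[i] = eps
            # Every probe re-learns a policy and re-simulates it.
            grad[i] = (outer_loss(theta + d, s0, demo)
                       - outer_loss(theta - d, s0, demo)) / (2 * eps)
        theta -= lr * grad
    return theta


s0 = np.zeros(2)
theta_true = np.array([1.0, 0.5])  # hidden cost used to synthesize a toy "demo"
demo = rollout(s0, inner_policy_opt(s0, theta_true))
theta_hat = irl(s0, demo, theta0=np.zeros(2))
```

Because the toy demonstration is generated from a known θ, the recovered parameters should land close to it. With real visual demonstrations there is no ground-truth cost to check against, only the distance between predicted and demonstrated trajectories.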
